AI in Education: Between Promise, Panic and the Terms & Conditions Nobody Reads.

Education, as an industry, has always been resistant to change. Whether at an institutional or individual level, innovations and new approaches have tended to look good on paper, only for reality to prove otherwise. That’s exactly what makes the sudden rise of AI in education so striking. So what makes this time different?

Somehow AI went from niche tech jargon to the word we hear more often than our own names. New tools appear every day, some brilliant and others very questionable, all marketed like they’re about to reinvent humanity by Friday. Suddenly every edtech startup promises to personalize learning, reduce workloads, democratize knowledge, and somehow change the world. And as a user, if you’re not adopting at least two of these tools, you’re instantly seen as outdated. Worse, you’re framed as the slow one in the tech race.

Because how do you push back against promises that sound this irresistible? “AI will personalize learning. AI will reduce teacher workload. AI will improve student outcomes. AI can teach you anything and everything.” These are the kind of claims that make it seem like every challenge, mismatch, overload, gap, and bottleneck in education can finally be solved, almost as easily as updating an app. All of a sudden, the technology isn’t just helpful; it’s positioned as the solution to every learning problem we’ve had since the invention of chalk.

When the story sold doesn’t match the story lived

As someone who works in marketing, I know how these stories are crafted. You don’t sell AI by saying, “It’s a tool that might help, depending on capacity, training, infrastructure, and data governance.”

You sell it by saying: “Revolutionize learning.”

Cue the shining animations and the promise that teachers will suddenly have time to breathe — while students become more engaged, parents feel reassured, and educational institutions finally believe they’ve found the “future-proof” solution they’ve been waiting for. This is where the cracks begin to show, not because AI is bad, but because the story skips all the boring (but necessary) complications: training, ethics, bias, privacy, consent, digital literacy, infrastructure, and the simple fact that humans do not update as quickly as software versions.

AI can absolutely support teachers when it’s implemented thoughtfully. But it’s not a plug-and-play solution; it’s more of a :

The marketing promise is convenience. The reality is a change many schools aren’t ready for. And if the steady rise of edtech solutions over the past decade has taught us anything, it’s that education still struggles to adopt even the most mainstream tools. Beyond the occasional use of the popular OpenAI platforms, the gap between what’s available and what’s actually integrated into classrooms is proof that the bridge hasn’t been crossed yet.

The ethical shadows we don’t always look at

Before the demos and the glossy brochures, this conversation was already happening at the policy level. Because concerns around AI in education aren’t new at all. In fact, they’re serious enough that UNESCO published a 50-page report in 2021 called AI and Education: Guidance for Policy-Makers, a document meant to help governments and institutions stay grounded while the tech world sprints ahead. The report makes one thing very clear: AI’s promise comes packaged with conditions. It highlights the gap between what the technology can do in theory and what education systems are actually ready for. It warns about data privacy, algorithmic bias, unequal access, and the uncomfortable reality that policy frameworks and teacher readiness often lag far behind the tools being sold. In other words, even at the highest global level, the message is the same: AI can support learning, but only if we approach it with governance, transparency, and a very realistic understanding of its limits.

Speaking from personal experience, my first real attempt at building an edtech product was a platform called Tuto. The idea was simple: connect students with older peers or recent graduates who could help them navigate subjects, exams, and school life. It came from a real pain point and a genuine desire to make things easier for learners. But building it quickly revealed a harder truth: good intentions don’t automatically translate into safe or ethical solutions. When your users are mostly middle and high school students, everything becomes more delicate. These are minors. Their safety isn’t just important; it’s non-negotiable. Questions around safety, privacy, and accountability grow louder, especially in communities where parents aren’t always familiar with digital risks or how online platforms actually work. An entrepreneurial mindset, however well-meaning, doesn’t guarantee safe or practical outcomes.

And that’s the part of the AI conversation that often gets sidelined. While institutions chase efficiency and innovation, a quieter conversation lingers underneath, one with very real consequences:

Student data being collected without clear or informed consent.

Algorithms reinforcing biases in grading, admissions, or recommendations.

AI systems storing more information than anyone realizes or can properly oversee.

Parents assuming safety because a brochure said the tool was “secure.”

Teachers being pressured into using systems they don’t fully trust — or absorbing extra workload with no real support.

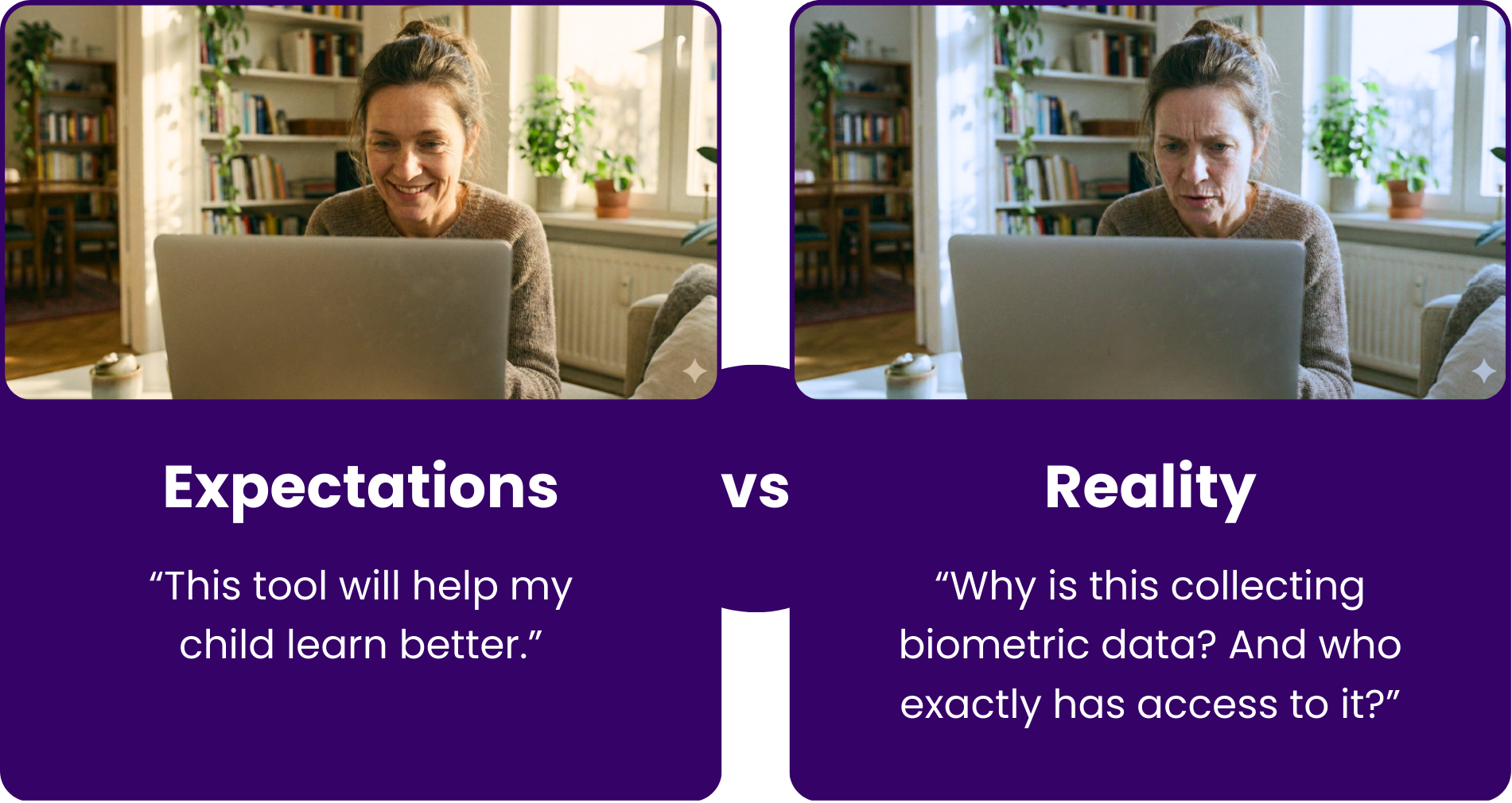

Parents’ reactions while exploring the details of the AI education tools.

We talk about AI as if it’s neutral, but it’s not. It’s built by humans, trained on human data, and deployed into systems already shaped by inequality. So when AI enters a classroom, it doesn’t just bring efficiency or insight; it brings questions. And ignoring those questions doesn’t make them go away; it only makes them harder to answer later.

Where AI actually helps:

Some moments feel close to magic

Not everything is doom and fine print. For all the complications, there are moments where AI becomes practical. When used with intention, it can support the everyday realities of teaching and learning in ways that feel genuinely meaningful. It can take over repetitive tasks, help teachers understand student patterns, and make learning more accessible without drawing attention to students who already feel singled out.

AI can be extraordinary when used with intention:

A student with dyslexia hearing text read aloud without needing to ask for help.

A shy student gaining confidence from instant, judgment-free practice feedback.

A teacher using AI-generated summaries to quickly spot where an entire class is struggling.

A teacher handing off the repetitive, time-consuming tasks that never make it into job descriptions.

Even something as simple as visualizing students in their dream jobs, like the viral trend on TikTok, to boost motivation in a fun, creative way.

None of this replaces teachers or rewrites pedagogy overnight, but when aligned with clear goals and responsible oversight, AI can act as a supportive layer, improving what humans already do well and filling in the gaps where time, resources, or capacity fall short.

The two stories: Promise vs Practice

AI in education is not just about software integrations; it’s about everything institutions hope those integrations will magically solve. They hope that these tools will finally lighten the load. Perhaps AI can re-engage the students who’ve mentally checked out. Or maybe find ways to stretch the limited budgets a little further. And honestly, who can blame them? When you’re balancing rising expectations with shrinking resources, even a hint of relief feels worth chasing.

But AI doesn’t work on hope alone. It delivers only when the foundations are solid: most importantly, when teachers get continuous training, but also when data policies are actually transparent, when educators have a real voice in decision-making, and when everyone understands what the tool can and cannot do.

Expectation: AI transforms learning.

Reality: AI mirrors the system it enters.

And if that system is underfunded, overwhelmed, or unprepared, AI doesn’t magically fix it. It just becomes another layer of complexity stacked on top of everything else.

After the hype and buzzwords, what’s next?

AI in education doesn’t need dramatic marketing; it needs honesty. Because at the end of all the demos, promises, and glowing pitch decks, the real questions linger quietly in the background. Are we adopting tools, or are we buying optimism? What would responsible AI look like if the narrative matched the reality of classrooms, budgets, and the people who hold the system together? And maybe the biggest provocation of all: what would shift if we finally told the truth about the work behind the technology (the training, the policies, the human judgment) instead of pretending innovation arrives fully formed?

If there’s a future where AI truly strengthens education, it will be built on that honesty. Not marketing. Not hype. Not shortcuts. Just the courage to see the tool for what it is, and the wisdom to use it well.